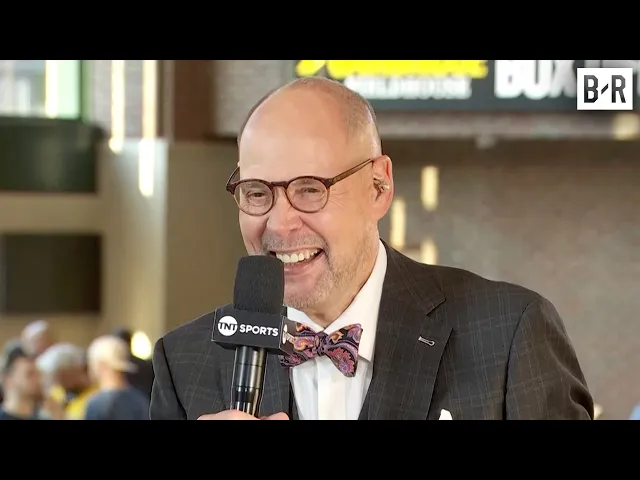

Google AI Result Says Ernie Johnson is Black 😂

AI mishaps reveal digital bias problem

In the world of sports commentary, few shows carry the cultural weight and viewer loyalty of TNT's "Inside the NBA." A recent segment from the show has gone viral, highlighting a significant issue with artificial intelligence that business leaders should take note of. When the crew discovered that Google's AI system incorrectly identified host Ernie Johnson as Black, their humorous reaction sparked broader conversations about algorithmic bias and the current limitations of AI systems that organizations are rapidly adopting.

Key insights from the incident:

- AI systems still struggle with basic identification tasks: Despite billions in development, Google's AI incorrectly categorized Ernie Johnson's race, revealing fundamental flaws in how these systems interpret and categorize human characteristics.

- Public visibility of AI failures is increasing: The lighthearted response from the Inside the NBA crew (particularly Shaquille O'Neal and Charles Barkley) demonstrates how AI mistakes are increasingly becoming public conversation topics rather than hidden technical issues.

- Algorithmic bias remains a persistent challenge: Even major platforms like Google continue to produce biased or incorrect results when handling race, gender, and other human characteristics, despite years of work on the problem.

Why this matters beyond comedy

The most revealing aspect of this incident is not the humorous reaction but what it says about AI readiness for business applications. When a platform as sophisticated and well-funded as Google's AI can make such a fundamental error about something as basic as a public figure's racial identity, it raises critical questions about AI reliability in more complex business contexts.

This matters tremendously for organizations across sectors. As businesses increasingly deploy AI for everything from customer service to hiring decisions, the possibility of embedded bias or simple factual errors poses significant risks. A hiring AI that misidentifies candidate characteristics could create legal exposure. A customer service AI that makes similarly flawed assumptions might damage relationships with key market segments.

The stakes extend beyond just embarrassment or viral moments. Studies from MIT and Stanford have consistently shown that even the most advanced vision and language AI systems exhibit measurable biases in how they process human characteristics including race, gender, and age. The business implications range from regulatory concerns to reputation damage and lost opportunities.

Beyond what the video showed

What makes this challenge particularly difficult is that bias often appears in unexpected places. Consider Microsoft's
