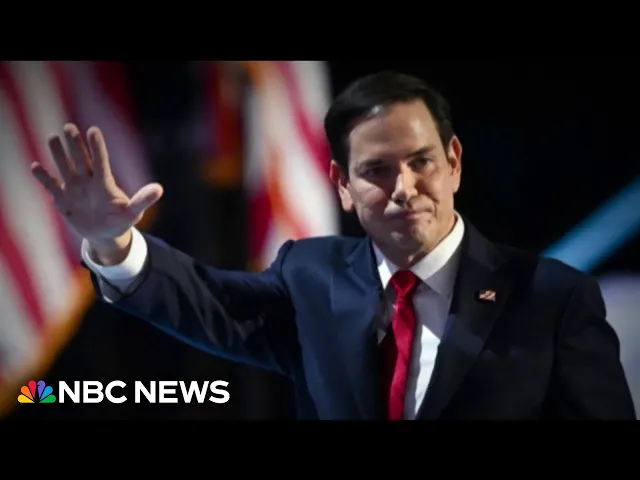

Investigation underway after AI Marco Rubio impostor contacts top officials

AI voice cloning threatens political security

In an alarming development that underscores the growing sophistication of artificial intelligence, an investigation has revealed that someone impersonating Secretary of State Marco Rubio successfully contacted several high-ranking officials, including a U.S. four-star general. This incident marks a concerning escalation in AI-enabled deception, blurring the lines between authentic communication and sophisticated fakery in ways that could have profound implications for national security and political discourse.

The troubling case of the fake Rubio

- Multiple officials were deceived by an AI-generated voice clone of Secretary of State Marco Rubio, with at least one four-star general engaging in a substantive conversation with the impersonator before realizing something was amiss.

- The technology behind this deception has rapidly advanced, making voice cloning increasingly convincing and accessible. What once required extensive audio samples and technical expertise can now be accomplished with minimal source material and user-friendly tools.

- This isn't an isolated incident but part of a growing trend, with similar impersonation attempts targeting other officials and world leaders, signaling a new frontier in disinformation campaigns and security threats.

- Traditional verification protocols are proving inadequate against these sophisticated AI impersonations, forcing government and military officials to develop new authentication measures and security practices.

Why this matters more than you might think

The most concerning aspect of this incident isn't just that it happened, but what it represents: we've entered an era where voice—long considered a reliable biometric identifier—can no longer be trusted implicitly. This development fundamentally changes how sensitive communications must be handled at the highest levels of government and business.

This matters because our institutions and security protocols haven't evolved at the same pace as AI capabilities. Many organizations still rely on voice recognition as a primary authentication method, and human beings are naturally inclined to trust what they hear when it sounds like someone they know. The psychological impact of this trust exploitation could be devastating in contexts where rapid decision-making is essential.

The timing couldn't be more problematic as we enter a contentious election cycle. Imagine campaign communications being hijacked, fake concession calls being made to opponents, or fabricated statements being released to the media—all with convincing audio that passes initial scrutiny. The potential for democratic disruption is enormous.

Beyond the
