Prompt Engineering is Dead

The real future of LLM deployment isn't prompts

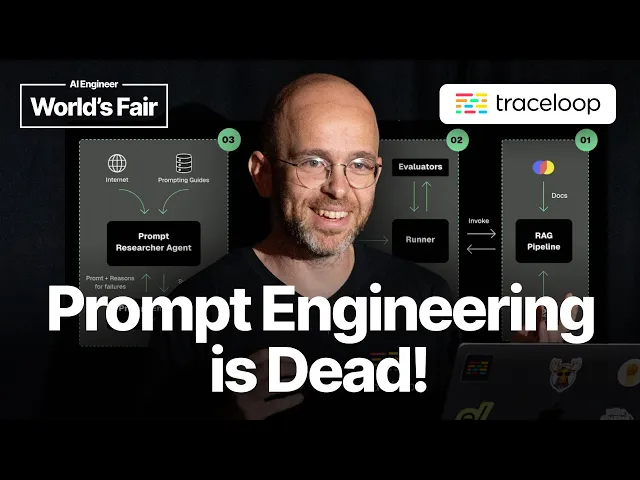

In the rapidly evolving field of artificial intelligence, and particularly with the widespread adoption of large language models, the techniques used to extract value from these systems keep changing at breakneck speed. Nir Gazit of Traceloop makes a compelling case that prompt engineering, the craft of writing precise inputs to steer AI responses, may already be fading into obsolescence. What replaces it could fundamentally reshape how businesses implement AI solutions.

Key Points

- Prompt engineering is shifting from a human-centered art form to automated approaches, including fine-tuning, as models grow more sophisticated and the focus moves to production deployment.
- The real challenges in AI lie not in crafting the perfect prompt but in building robust production systems that handle observability, monitoring, evaluation, and user feedback loops.
- Companies are moving toward orchestration frameworks and LLMOps platforms that treat models as commodities and focus on the reliability and maintenance of AI systems at scale.
- Building effective AI systems requires a developer-first mindset: proper source control, testing infrastructure, and continuous integration pipelines.
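The last two points, treating models as interchangeable commodities and gating changes behind tests, can be illustrated with a minimal sketch. It assumes a hypothetical `TextModel` interface and a toy `EchoModel` backend so it runs offline; a real deployment would wrap a provider SDK behind the same interface. The `EvalCase` checks and the 0.9 pass-rate threshold are arbitrary illustrations, not anything Gazit or Traceloop prescribes.

```python
from dataclasses import dataclass
from typing import Callable, List, Protocol


class TextModel(Protocol):
    """Any model backend; application code depends only on this interface."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical stand-in backend so the example runs without network access."""

    def complete(self, prompt: str) -> str:
        return f"Summary: {prompt.strip()}"


@dataclass
class EvalCase:
    prompt: str
    check: Callable[[str], bool]  # returns True if the model output is acceptable


def run_evals(model: TextModel, cases: List[EvalCase]) -> float:
    """Run every case against the model and return the fraction that passed."""
    passed = sum(1 for case in cases if case.check(model.complete(case.prompt)))
    return passed / len(cases)


def ci_gate(score: float, threshold: float = 0.9) -> int:
    """Return a process exit code: 0 lets the pipeline continue, 1 fails it."""
    return 0 if score >= threshold else 1


# Hypothetical regression cases kept in source control next to the prompts.
CASES = [
    EvalCase("Quarterly revenue rose 4%.", lambda out: out.startswith("Summary:")),
    EvalCase("Churn fell in Q3.", lambda out: "Churn" in out),
    EvalCase("", lambda out: len(out) < 200),
]


if __name__ == "__main__":
    score = run_evals(EchoModel(), CASES)
    print(f"pass rate: {score:.2f}, exit code: {ci_gate(score)}")
```

Because the evaluation depends only on the `complete` method, swapping one model provider for another becomes a one-line change, and a CI job could call `sys.exit(ci_gate(score))` to block a merge when the pass rate drops.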

Why Prompt Engineering's Decline Matters

The most insightful takeaway from Gazit's presentation is that the industry is undergoing a fundamental shift: from viewing AI as a magical black box that needs perfect instructions to treating it as another software component that needs proper engineering practices. This change in perspective is profound because it normalizes AI development, putting it within reach of conventional software engineering teams instead of requiring specialized prompt engineers or ML experts.
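Treating the model as an ordinary software component also means instrumenting it like one. The minimal sketch below wraps any completion function so that each call records its prompt, output or error, and latency. The `traced` decorator, the in-memory `TRACE_LOG` list, and `fake_complete` are hypothetical illustrations, not Traceloop's API; a production system would export these records to a tracing or monitoring backend instead of keeping them in memory.

```python
import functools
import time
from typing import Callable, Dict, List

# In-memory trace store for illustration only; a real system would export records.
TRACE_LOG: List[Dict] = []


def traced(fn: Callable[[str], str]) -> Callable[[str], str]:
    """Record prompt, output (or error), and latency for every call to fn."""

    @functools.wraps(fn)
    def wrapper(prompt: str) -> str:
        start = time.perf_counter()
        record: Dict = {"function": fn.__name__, "prompt": prompt}
        try:
            output = fn(prompt)
            record["output"] = output
            return output
        except Exception as exc:
            # Keep the failure in the trace, then let the caller handle it.
            record["error"] = repr(exc)
            raise
        finally:
            # Runs on success and failure, so every call leaves a record.
            record["latency_s"] = time.perf_counter() - start
            TRACE_LOG.append(record)

    return wrapper


@traced
def fake_complete(prompt: str) -> str:
    """Hypothetical model call; replace with a real provider client."""
    return prompt.upper()


if __name__ == "__main__":
    fake_complete("hello")
    print(TRACE_LOG[-1]["output"])
```

The decorator keeps instrumentation out of the application logic, which is the same separation of concerns engineers already apply to databases and HTTP clients.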

This matters for businesses right now. As more companies rush to implement AI solutions, they are discovering that the initial excitement of crafting clever prompts quickly gives way to the complex reality of maintaining these systems in production. According to a recent McKinsey survey, while over 60% of organizations are experimenting with generative AI, fewer than 25% have successfully deployed these solutions at scale, precisely because they underestimated the engineering challenges Gazit highlights.

What's Missing from the Conversation

While Gazit's analysis is spot-on regarding the technical evolution, it overlooks an important human factor: the organizational change management required for this transition. Many companies have invested heavily in training prompt engineers and developing prompt management systems. The pivot toward engineering-focused approaches requires not just new