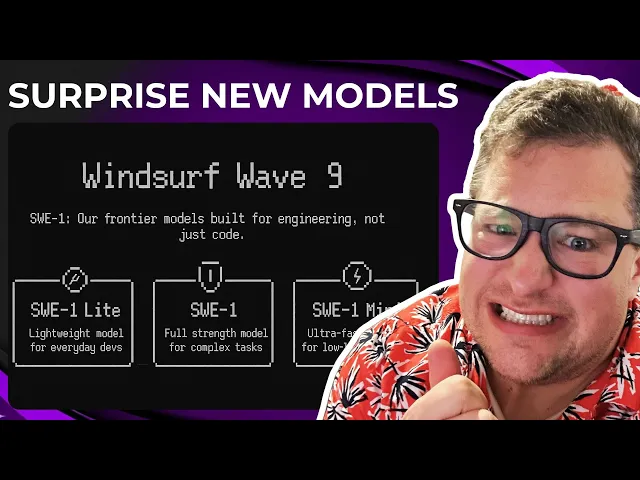

Windsurf’s new AI Models are … interesting

Windsurf AI overturns the competitive landscape

In the rapidly evolving artificial intelligence landscape, new models emerge with surprising capabilities that challenge our assumptions about what's possible. The recent introduction of Windsurf's new AI models represents such a moment, potentially reshaping competitive dynamics in a field dominated by familiar names like OpenAI and Anthropic. This development could signal a significant shift in how we evaluate and deploy AI systems in real-world applications.

Key insights from Windsurf's breakthrough

- Windsurf's new SWE-1 model demonstrates exceptional performance on programming tasks while using substantially fewer parameters than competing models, suggesting more efficient architectural design
- The model exhibits impressive contextual understanding, producing not just working code but coherent natural language explanations that reveal substantial reasoning capabilities
- While other models may still lead in some metrics, Windsurf's approach signals a potential paradigm shift where parameter count becomes less important than architectural innovation

The most fascinating aspect of Windsurf's achievement lies in what it reveals about the AI development landscape. Unlike established players with massive resources, Windsurf appears to have taken a fundamentally different approach to model architecture rather than simply scaling existing techniques with more computing power. This suggests we may be entering a new phase of AI development where cleverness trumps brute force.

This matters enormously for the industry as a whole. If smaller, more efficient models can achieve comparable results to massive systems, it democratizes access to advanced AI capabilities. Companies without the resources of OpenAI or Google could potentially develop competitive offerings, broadening the marketplace and accelerating innovation through diverse approaches rather than pure computing scale.

What's particularly noteworthy about this development is how it challenges the "bigger is better" narrative that has dominated AI research in recent years. Since GPT-3 demonstrated the power of scale, the focus has been on increasing parameter counts and training data, with each new model boasting ever-larger numbers. Windsurf's approach suggests there might be significant inefficiencies in these massive models that clever engineering can eliminate.

Consider DeepMind's recent research on "sparse models" that activate only a portion of their parameters for any given task. This work supports the idea that much of a large model's capacity may be redundant for specific applications. Windsurf may have found a way to specifically optimize for
